The Future of Data Centers: Infrastructure, Efficiency, and Sustainable Growth in the AI Era

August 31, 2025

In today’s AI-driven world, data centers sit at the heart of nearly every digital service—and yet their rapid evolution poses a three-fold challenge: managing ever-higher rack densities, securing the massive power they require, and doing so without exhausting water supplies or undermining climate goals. This survey unpacks how the industry is responding—from the rise of specialized accelerators and liquid-cooling breakthroughs to the geopolitical stakes of site selection and the financial mechanics that underwrite it all.

Over six sections, we:

- Trace the foundations of modern design (DPUs, hyperscale vs. colocation)

- Dive into cooling and efficiency innovations that push PUEs below 1.1

- Examine regional case studies—Switzerland, Finland, the UK, Louisiana—to reveal local constraints and strategies

- Highlight the sustainability “trifecta” of water scarcity, grid flexibility, and emission-free power, with lessons from the Nordics

- Explore the securitization boom, depreciation-versus-revenue math, and the looming risk of an AI-infrastructure bubble

- Synthesize emerging themes (edge computing, modular pods, demand-response grids) and pose the pivotal questions—regulatory, technological, geopolitical—that will shape the next decade.

Whether you’re an IT executive, infrastructure investor, or policy maker, this survey offers a concise yet comprehensive guide to the forces redefining data-center scale, sustainability, and resilience.

Section 1 – Foundations of Modern Data Centers

1.1 The Rise of the Data Processing Unit

In the last decade, Data Processing Units (DPUs) have upended conventional data center design by offloading networking, storage, and security functions from server CPUs. What began as proprietary smart NICs in hyperscale clouds has matured into a standardized hardware layer championed by NVIDIA (BlueField), Intel (IPU), and AMD (Pensando), with software orchestration from VMware’s Distributed Services Engine and Red Hat OpenShift’s DPU operator. Today, reference architectures from NVIDIA (AI Cloud), Gartner (AI Edge), and Cisco (Hypershield) embed DPUs as mandatory components—underscoring their critical role in securing high-bandwidth AI pipelines and enforcing multi-tenant isolation at scale.

1.2 Hyperscale and Hybrid Infrastructure

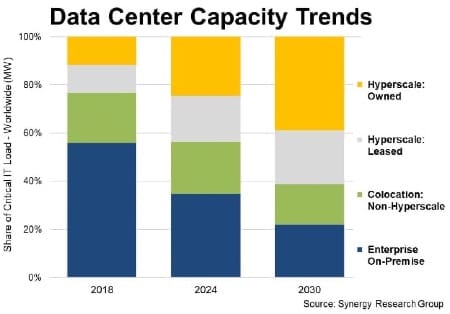

Hyperscale operators now run 1,189 facilities worldwide, accounting for 44 percent of global data-center capacity. By 2030, their share is projected to grow to 61 percent, while colocation and on-premise enterprise sites will shrink in relative terms (see Table 1).

| Segment | Q1 Share | 2030 Forecast |
| --- | --- | --- |
| Hyperscale | 44 % | 61 % |
| Colocation | 22 % | 17 % |
| On-Premise | 34 % | 22 % |

Table 1 – Share of Global Data-Center Capacity

Key drivers of this shift include:

- Soaring demand for cloud services, big-data analytics, and AI workloads

- The need to scale power and cooling for GPU-heavy clusters

- Real-estate and permitting constraints in mature metros

- Expansion into emerging markets with favorable land, energy, and policy conditions

Regionally, the United States leads in company-owned hyperscale campuses, while APAC and EMEA still rely more on leased or third-party facilities.

1.3 The Evolving Colocation Market

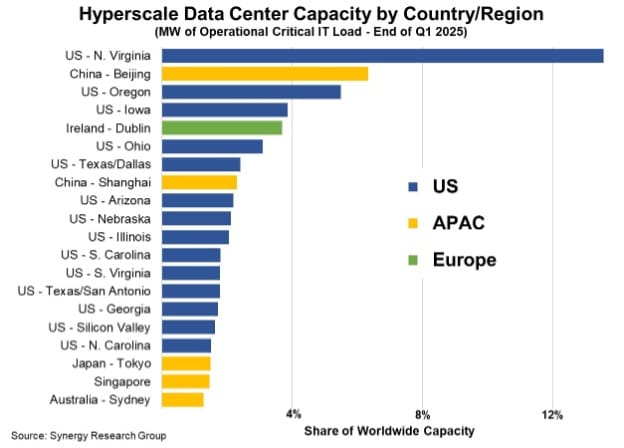

While hyperscale campuses expand, colocation remains vital for enterprises seeking turnkey capacity. The ten largest metro markets still generate 41 percent of global colocation revenues, growing at 8 percent annually, whereas tier-2 and tier-3 cities capture 39 percent of revenues and expand at 12–17 percent per year (see Tables 2 and 3).

| Market Tier | Revenue Share |
| --- | --- |
| Top 10 Metros | 41 % |
| Next 30 Metros | 20 % |
| Following 30 Metros | 19 % |
| All Others | 20 % |

Table 2 – Colocation Revenues by Market Tier

| Market Tier | Growth Rate |
| --- | --- |
| Top 10 Metros | 8 % |
| Next 30 Metros | 12 % |
| Following 30 Metros | 17 % |

Table 3 – Average Annual Growth by Market Tier
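To see what these growth differentials imply, the sketch below compounds each tier's current revenue share at its stated annual growth rate. It assumes the rates in Table 3 stay constant, which the source does not claim, and it leaves out "All Others" because no growth rate is given for that tier. The function name and the index convention are illustrative.

```python
# Revenue shares (%) and annual growth rates taken from Tables 2 and 3.
TIERS = {
    "Top 10 Metros": (41.0, 0.08),
    "Next 30 Metros": (20.0, 0.12),
    "Following 30 Metros": (19.0, 0.17),
}


def project_revenue_index(years: int) -> dict[str, float]:
    """Compound each tier's current share at its growth rate.

    The result is a relative revenue index (today's global total = 100),
    not a forecast market share, because one tier has no stated growth rate.
    Assumption: growth rates remain constant over the whole horizon.
    """
    return {
        tier: share * (1.0 + growth) ** years
        for tier, (share, growth) in TIERS.items()
    }


for tier, index in project_revenue_index(5).items():
    print(f"{tier}: {index:.1f}")
```

Under these assumptions the "Following 30 Metros" tier overtakes the "Next 30 Metros" tier by the second year, which is the dynamic behind the growth hotspots described next.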

Northern Virginia leads the ranking, followed by Beijing, Shanghai, London, and Tokyo. Meanwhile, growth hotspots like Lagos, Warsaw, Dubai, Austin, and Kuala Lumpur demonstrate how power availability, real-estate affordability, and regulatory flexibility drive expansion into emerging markets.

These three pillars—DPUs that redefine computational layers, hyperscale architectures that reshape capacity distribution, and a dynamic colocation market that offers rapid scalability—form the architectural bedrock of modern cloud-native ecosystems.

Section 2 – Cooling & Efficiency: Engineering the Next Generation

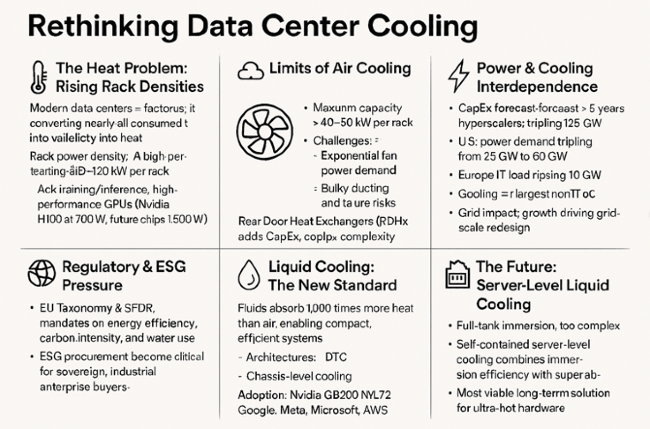

2.1 Framing the Thermal Challenge

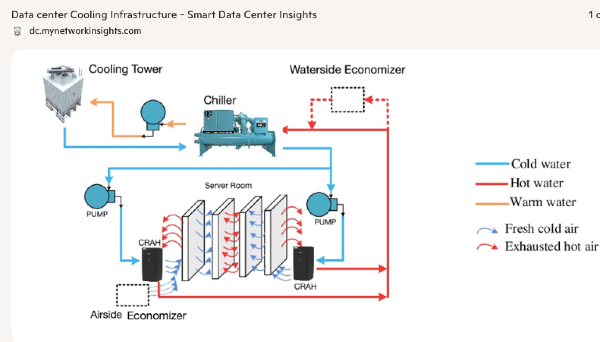

Data centers convert nearly every watt of electricity they consume into heat, and AI-driven rack densities have surged from around 10 kW to over 120 kW per rack, far beyond the reach of traditional air cooling. Cooling now ranks as the second-largest capital expenditure after power infrastructure and the single biggest operational cost outside of IT equipment. Even leading hyperscale operators such as AWS, Google, and Meta, which push air-side economization and thermal containment hard, struggle to bring Power Usage Effectiveness (PUE) below the 1.4–1.6 range in typical halls; only their best halls approach 1.1.
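For readers less familiar with the metric, PUE is the ratio of total facility energy to the energy delivered to IT equipment. The minimal sketch below shows that ratio and the share of energy lost to overhead at a given PUE. The function names are illustrative, and the two PUE values are simply the endpoints quoted above.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility energy not consumed by IT equipment."""
    return 1.0 - 1.0 / pue_value


for label, value in [("typical air-cooled hall", 1.5), ("best-in-class hall", 1.1)]:
    print(f"{label}: PUE {value:.2f}, overhead {overhead_share(value):.0%}")
```

At a PUE of 1.5 about a third of all energy goes to cooling and other overhead; at 1.1 the overhead falls to roughly 9 percent.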

At the same time, data-center electricity demand is set to more than triple in both the U.S. (from 25 GW to 80 GW) and Europe (from 10 GW to 35 GW) over the next five years. Regulatory regimes such as the EU Taxonomy for Sustainable Activities and the Sustainable Finance Disclosure Regulation now tie sustainable-finance eligibility and disclosure to energy efficiency, carbon emissions, and water use. Against this backdrop, incremental tweaks to air-based systems no longer suffice: data centers must adopt transformative cooling technologies to sustain AI-driven growth without breaching environmental or economic limits.
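The demand figures above can be restated as compound annual growth rates. The sketch below applies the standard CAGR formula to the quoted start and end values, assuming smooth growth over exactly five years, which is a simplification.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1.0 / years) - 1.0


us_growth = cagr(25.0, 80.0, 5)  # U.S.: 25 GW -> 80 GW
eu_growth = cagr(10.0, 35.0, 5)  # Europe: 10 GW -> 35 GW

print(f"U.S.: {us_growth:.1%} per year, Europe: {eu_growth:.1%} per year")
```

Both regions work out to roughly 26 to 29 percent growth per year, sustained for five years.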

2.2 Liquid Cooling Technologies

2.2.1 Direct-to-Chip Liquid Cooling (DCLC)

Direct-to-Chip Liquid Cooling uses cold plates mounted on CPUs, GPUs, and memory to capture up to 80 percent of heat at the source. By eliminating heat before it enters the air stream, DCLC slashes chiller and CRAC loads, enabling rack densities of 30–80 kW with PUEs below 1.2. Early deployments by major cloud providers prove its potential, though retrofitting existing halls carries higher CapEx and operational complexity.
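As a rough illustration of why cold plates relieve the air-handling plant, the sketch below splits a rack's heat load between the liquid loop and the room air. The 80 percent capture rate is the upper bound quoted above; treating it as a fixed fraction is an assumption.

```python
def split_heat_load(rack_kw: float, liquid_capture: float = 0.80) -> tuple[float, float]:
    """Return (heat removed by liquid, heat left for air cooling) in kW.

    liquid_capture is the fraction of heat captured by cold plates;
    0.80 is the "up to" figure from the text, used here as an assumption.
    """
    if not 0.0 <= liquid_capture <= 1.0:
        raise ValueError("liquid_capture must be between 0 and 1")
    liquid_kw = rack_kw * liquid_capture
    return liquid_kw, rack_kw - liquid_kw


liquid_kw, air_kw = split_heat_load(80.0)
print(f"80 kW rack: {liquid_kw:.0f} kW to liquid, {air_kw:.0f} kW to air")
```

An 80 kW rack at full capture leaves 16 kW for the air system, which is within the range air cooling already handles.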

2.2.2 Full-Tank Immersion (FTI)

Full-Tank Immersion submerges server assemblies in dielectric fluids that uniformly absorb heat. Single-phase systems—such as Alibaba’s—have hit PUEs around 1.07, cutting energy use by over 35 percent. Two-phase designs promise further gains once standardization and scale challenges are addressed. While FTI delivers maximum thermal efficiency and compact footprints, its higher maintenance requirements and integration complexity remain hurdles.

2.2.3 Cooling Distribution Units (CDUs)

Cooling Distribution Units serve as the bridge between plant chillers and liquid-cooled racks. These modular, closed-loop modules regulate coolant flow, detect leaks in milliseconds, and integrate with building-management systems. CDUs enable operators to pilot DCLC alongside legacy air-cooled rows, then phase in immersion bays as budgets and layouts permit.
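The flow a CDU must regulate follows from a basic heat balance: heat carried equals mass flow times specific heat times temperature rise. The sketch below assumes plain water as the coolant and a 10 K rise across the rack; both are illustrative assumptions, since real loops often use glycol mixes and different set points.

```python
WATER_SPECIFIC_HEAT_KJ_PER_KG_K = 4.186  # approximate value for liquid water
WATER_DENSITY_KG_PER_L = 1.0             # approximate; glycol mixes differ


def coolant_flow_lpm(heat_kw: float, delta_t_k: float) -> float:
    """Litres per minute of water needed to carry heat_kw at a given temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    # 1 kW = 1 kJ/s, so this yields kg/s.
    mass_flow_kg_s = heat_kw / (WATER_SPECIFIC_HEAT_KJ_PER_KG_K * delta_t_k)
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60.0


# Hypothetical example: 64 kW of liquid-side heat with a 10 K rise.
print(f"{coolant_flow_lpm(64.0, 10.0):.1f} L/min")
```

Halving the allowed temperature rise doubles the required flow, which is why CDU sizing is tied so closely to rack density.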

2.3 Operational Optimization and Advanced Storage

Liquid cooling dovetails with AI-driven operational tuning. Advanced algorithms optimize coolant flow, airflow, power distribution, and predictive maintenance in real time, delivering incremental gains that compound at scale. Waste-heat recovery systems recycle thermal energy back into campus operations, while lithium-ion battery microgrids smooth peak loads.

Not every workload demands peak infrastructure. Tiered storage architectures classify data as “hot,” “warm,” or “cold,” matching each tier to the appropriate hardware: high-performance SSDs for AI training, dense HDD arrays for analytics, and low-power archival media for infrequent access. Emerging drive technologies—HAMR and Mozaic platforms—boost bits per spindle, reducing watts per petabyte and easing raw-material pressures.

2.3.1 AI-Driven Operational Tuning

Machine-learning algorithms now manage coolant flows, fan speeds, power distribution, and predictive maintenance in real time. These incremental gains compound across thousands of racks, squeezing every watt of efficiency from liquid-cooled clusters.

2.3.2 Waste-Heat Recovery & Energy Storage

By piping server waste heat into district-heating networks and deploying on-site battery microgrids, data centers smooth peak loads, store excess renewable energy, and recycle thermal output back into campus HVAC systems.

2.3.3 Tiered Storage & High-Density Drives

Workloads stratify into “hot” (AI training on NVMe SSDs), “warm” (analytics on dense HDD arrays), and “cold” (archives on low-power tape or object storage). Next-gen drives—HAMR and Mozaic platforms—boost bits per device, slashing watts per petabyte and easing supply-chain pressures.
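The tiering logic can be sketched as a simple classifier keyed on access frequency. The thresholds and the `Dataset` type below are hypothetical, chosen only to illustrate the idea; the tier-to-media mapping follows the text.

```python
from dataclasses import dataclass

# Media per tier, as described in the text.
TIER_MEDIA = {
    "hot": "NVMe SSD",
    "warm": "dense HDD array",
    "cold": "low-power tape or object storage",
}


@dataclass
class Dataset:
    name: str
    reads_per_day: float


def storage_tier(ds: Dataset, hot_threshold: float = 1000.0,
                 warm_threshold: float = 1.0) -> str:
    """Classify a dataset by access frequency (thresholds are illustrative)."""
    if ds.reads_per_day >= hot_threshold:
        return "hot"
    if ds.reads_per_day >= warm_threshold:
        return "warm"
    return "cold"


for ds in [Dataset("training-shards", 50_000), Dataset("quarterly-reports", 12),
           Dataset("2019-audit-logs", 0.01)]:
    tier = storage_tier(ds)
    print(f"{ds.name}: {tier} -> {TIER_MEDIA[tier]}")
```

In practice the thresholds would be tuned against observed access patterns and the cost of moving data between tiers.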

2.3.4 Geopolitical and Regulatory Dimensions

- The U.S. funnels state and corporate investment into high-density storage, renewable integration, and proprietary cooling tech as a matter of national resilience.

- Europe leads with stringent sustainability standards, carbon reporting, and circular-economy mandates that shape global procurement and operational norms.

- China pursues scale-first expansion with state-backed data-park builds, leveraging domestic rare-earth dominance to drive down hardware costs, then optimizing for efficiency.

- Control of critical minerals and “watts-per-petabyte” metrics has become a strategic asset in the global AI infrastructure race.

These combined approaches—liquid cooling to conquer heat, AI orchestration to fine-tune operations, tiered storage to avoid over-provisioning, and regional renewables to cut carbon—enable data centers to push densities above 100 kW per rack while driving PUEs toward 1.05. The next section will explore how these technical breakthroughs intersect with economics, policy, and the global quest for truly sustainable infrastructure.

2.4 Transition to Regional Case Studies

With transformative cooling and AI-driven efficiency strategies mapped out, the next question becomes: how do these innovations play out on the ground? Section 3 will examine regional case studies—from Switzerland’s energy-smart hubs to Finland’s geopolitically charged builds and the UK’s sustainability balancing act—showing how local context shapes the adoption of these next-gen infrastructures.

Section 3 – Regional Case Studies: Opportunities, Geopolitics, and Community Impacts

This section examines four regional case studies: Switzerland’s energy-smart hubs, Finland’s geopolitically charged builds, the UK’s sustainability balancing act, and Meta’s gas-backed Hyperion campus in Louisiana. Together they show how local context shapes the adoption of next-generation infrastructure.

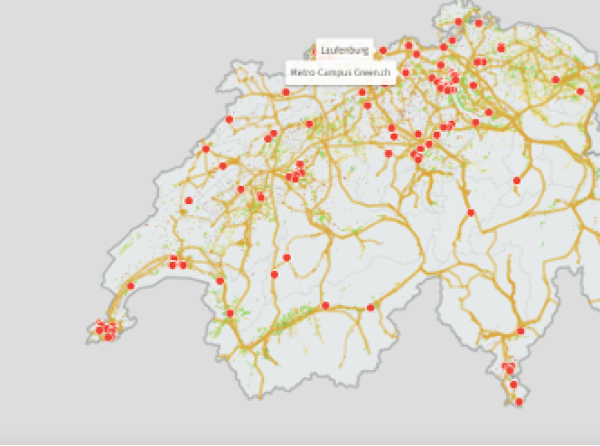

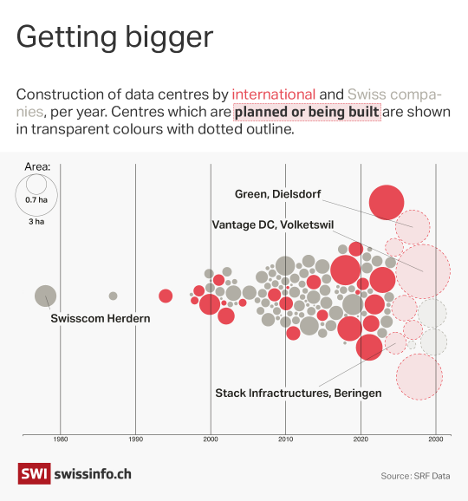

3.1 Switzerland’s Data Center Boom – A Premium Hub at a Crossroads

Switzerland has rapidly emerged as a premier data-center hub, drawing investment thanks to its political neutrality, stringent data-protection framework, and world-class fiber-optic and power infrastructure. Proximity to economic centers like Zurich and Geneva guarantees low-latency connectivity, while the Alpine climate and abundant lakes enable energy-efficient cooling. Global players such as Microsoft and Google have poured billions into Swiss campuses, with AI workloads driving fresh demand for high-performance facilities.

Yet this expansion places heavy demands on local resources: the new Dielsdorf campus is projected to consume seven times the electricity of its host municipality, the Beringen site withdraws 55 000 m³ of potable water annually, and prime farmland is being repurposed for construction. Most centers still lack waste-heat recovery systems, missing opportunities to feed district-heating networks and reduce overall environmental impact.

| Resource | Key Metric | 2030 Projection |
| --- | --- | --- |
| Electricity | Dielsdorf campus draws 7x municipality usage | 15 % of national demand |
| Water | Beringen site uses 55 000 m³/year (≈22 Olympic pools at 2 500 m³ each) | Continued high withdrawal |
| Land | Conversion of prime farmland in Dielsdorf | Ongoing urbanization |
| Waste Heat | Limited district-heating integration (Winterthur only) | Majority remains untapped |

Table 1 – Switzerland Data Center Resource Impact

3.2 Finland’s Data Center Gamble: Local Growth Meets Global Geopolitics

The €200 million Hyperco facility in Kouvola illustrates how a small town’s economic revival can collide with geopolitics. Finland’s sub-zero winters and abundant lake water slash cooling costs, and stable governance attracts major projects. However, the choice of ByteDance (TikTok’s parent) as anchor tenant triggered national-security alarms: Finland lies outside U.S. export controls on advanced AI chips, potentially creating a backdoor for restricted semiconductors. Complicating matters, Hyperco is owned by the Damac Group of Emirati tycoon Hussain Sajwani, an investor known for his ties to U.S. politics. Finnish authorities now face a stark choice: block the project and forgo jobs and infrastructure upgrades, or proceed and risk embedding a strategic rival into critical Western infrastructure.

| Aspect | Details |
| --- | --- |
| Investment | €200 million |
| Operator | Hyperco / Edgnex (Damac Group) |
| Anchor Tenant | ByteDance (TikTok) |
| Climate & Water | Sub-0 °C winters; abundant lake water |
| Security Concerns | Data sovereignty; potential sanctions-evasion via AI chips |
| Geopolitical Links | U.S.–China rivalry; Gulf investor ties to U.S. politics |

Table 2 – Finland Kouvola Data Center at a Glance

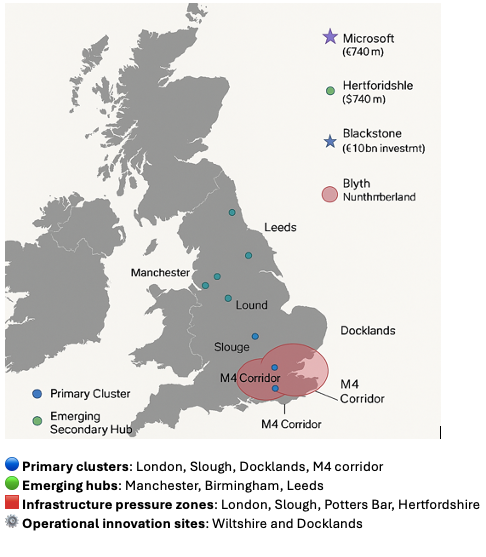

3.3 The UK’s Data Centre Expansion: Promise, Pressures, and Strategic Dilemmas

The United Kingdom hosts roughly 477 data centers, the third-largest national total in the world, and is set to add nearly 100 more by 2030. London’s Slough corridor remains Europe’s densest cluster, while the Docklands and M4 corridor support finance and AI workloads. Grid saturation around London is prompting operators to explore secondary hubs in Manchester, Birmingham, and Leeds. UK data centers already consume 2–3 % of national electricity (potentially up to 12 % when indirect loads are included), and requests for 150 MW grid connections threaten to delay residential developments. To guarantee uptime, many facilities are installing on-site natural-gas turbines, a cleaner alternative to diesel but one at odds with net-zero targets. Water usage and cooling pose further challenges as rack densities exceed 40 kW, with community objections mounting over potable water withdrawals and environmental impact. Pilot projects in Docklands capture waste heat for district heating, though coverage remains limited.

| Metric | Current Value | 2030 Projection |
| --- | --- | --- |
| Total Facilities | 477 | ~575 |
| New Facilities by 2030 | n/a | ~100 |
| Electricity Share (direct) | 2–3 % | +71 TWh over 25 yrs |

Table 3 – UK Data Centre Expansion at a Glance

3.4 Meta’s Hyperion: AI Power Demands Drive Gas Expansion in Louisiana

Meta’s “Hyperion” campus in Richland Parish spans 4 million sq ft and could draw up to 5 GW of electricity—comparable to Manhattan’s entire load. Entergy Louisiana will meet this demand by building three natural-gas plants totaling 2.3 GW, extending transmission lines, and procuring 1.5 GW of solar capacity, with Meta covering its share of capital costs. While Meta pledges not to burden other customers and to match consumption with renewables, critics warn of higher utility bills, increased greenhouse-gas emissions, and water-use strains. Despite local pushback, the Louisiana Public Service Commission fast-tracked approval to retain Meta’s investment, and Entergy’s stock price rose on expectations of higher earnings.

| Metric | Value | Notes |
| --- | --- | --- |
| Peak Electricity Demand | Up to 5 GW | Comparable to Manhattan’s power load |
| Gas Generation Capacity | 2.3 GW | Three new Entergy natural-gas plants |
| Solar Procurement | 1.5 GW | Meta’s matching renewables commitment |
| Facility Footprint | 4 million sq ft | Largest single-site AI data center |
| Regulatory Approval | Fast-tracked | Louisiana PSC action to retain investment |

Table 4 – Hyperion Energy Infrastructure Breakdown

Collectively, these case studies reveal how regional strengths—political stability, climate, infrastructure, regulation—and local constraints shape data-center strategies. From Switzerland’s sustainability crossroads to Finland’s geopolitical gambit, the UK’s balancing act, and Louisiana’s gas-driven power build-out, each example highlights the interplay between growth, sovereignty, and sustainability. Section 4 will deepen this analysis by examining the systemic stress points of water scarcity, grid instability, and the imperative for emission-free energy, drawing on integrated models such as the Nordic approach to guide sustainable expansion worldwide.

These case studies—from Switzerland’s resource strains and Finland’s sovereignty dilemma to the UK’s grid bottlenecks and Louisiana’s gas-driven build-out—underscore a common truth: no region can expand digital infrastructure indefinitely without confronting hard limits on water, power, and emissions.

Section 4 – Sustainability Stress Points: Water, Power, and Emission-Free Energy

We'll examine how water scarcity, grid instability, and the imperative for truly emission-free energy define the next battleground for sustainable data-center growth, and explore integrated frameworks (like the Nordic model) that aim to reconcile scale with environmental stewardship.

4.1 The Critical Trifecta: Introduction

As AI and cloud computing reshape our world, data centers now contend with three intertwined constraints:

- Water scarcity, driven by rising cooling demands and disrupted rainfall patterns

- Grid instability, exposed by unprecedented electricity loads

- Emission-free power, the imperative to decarbonize at scale

Meeting all three is essential for sustainable growth. The Nordic region—boasting cold climates, abundant renewables, and integrated district-heating networks—offers a blueprint for reconciling these challenges.

4.2 Water Scarcity: Europe’s Parched Future

Climate change has turned Europe’s data-center water needs into a critical vulnerability. Heatwaves bake the soil, preventing aquifer recharge, while AI workloads alone will demand an estimated 4.2–6.6 billion m³ of cooling water annually by 2027—more than Denmark’s total national use. Mid-sized facilities already draw around 1.4 million liters per day. Without closed-loop systems or non-potable sources, operators face regulatory caps that could drive new builds offshore.

| Metric | Value |
| --- | --- |
| AI Cooling Demand (2027 forecast) | 4.2–6.6 billion m³/year |
| Denmark’s Total Annual Use | ~4 billion m³/year |
| Avg. Mid-Sized Facility Daily Use | 1.4 million L/day (~370 000 gal/day) |
| Regulatory Risk | EU water-use limits; relocation threats |

Table 1 – Water Demand vs. National Benchmarks

4.3 Grid Instability: The Power-Flexibility Crisis

Meanwhile, the AI revolution’s voracious appetite for electricity has utilities defaulting to new fossil-fuel plants, an expensive, polluting response that ratepayers could end up funding through higher bills. Legal scholars Alexandra Klass and Dave Owen argue for a radical rethink: treat hyperscale data centers as a “flexible” grid customer class. Under interruptible contracts, akin to Western water-rights or industrial gas deals, operators would secure faster connections in exchange for agreed power reductions during grid stress, leveraging their ability to shift non-critical workloads across time zones and regions. Early pilots in Ohio (a dedicated rate class) and Nevada (a geothermal power deal with Google) hint that this demand-response model could head off both blackouts and unnecessary gas-plant booms.

| Scenario | Demand | Grid Response |
| --- | --- | --- |
| Meta Hyperion (LA) | up to 5 GW | 2.3 GW gas plants + 1.5 GW solar |
| UK Hyperscale Campus | 150 MW | On-site gas turbines + network upgrades |
| Flexible-Customer Model | Variable | Interruptible contracts; demand-response |

Table 2 – Power Demand Scenarios & Grid Strategies
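To make the flexible-customer idea concrete, the sketch below shows one way an operator might choose which workloads to pause when the grid calls for a reduction. It is a hypothetical greedy policy, not the mechanism Klass and Owen propose; the `Workload` type, the workload names, and the power figures are all illustrative.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    power_mw: float
    deferrable: bool  # True if it can be paused or shifted to another region


def curtail(workloads: list[Workload], required_reduction_mw: float):
    """Pause deferrable workloads, largest first, until the reduction is met.

    Returns the list of paused workloads and any unmet shortfall in MW.
    """
    paused: list[Workload] = []
    remaining = required_reduction_mw
    candidates = sorted(
        (w for w in workloads if w.deferrable),
        key=lambda w: w.power_mw,
        reverse=True,
    )
    for w in candidates:
        if remaining <= 0:
            break
        paused.append(w)
        remaining -= w.power_mw
    return paused, max(remaining, 0.0)


fleet = [
    Workload("customer-inference", 60.0, deferrable=False),
    Workload("model-training", 50.0, deferrable=True),
    Workload("batch-analytics", 25.0, deferrable=True),
    Workload("backup-replication", 15.0, deferrable=True),
]
paused, shortfall = curtail(fleet, required_reduction_mw=60.0)
print([w.name for w in paused], shortfall)
```

A real scheme would also weigh restart costs, service-level commitments, and the option of shifting load to other regions instead of pausing it.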

4.4 Emission-Free Energy: The Nordic Blueprint

Yet perhaps the most compelling path forward comes from the Nordic region. There, 100 percent renewable grids (hydro, wind, geothermal), naturally cold air, and district-heating networks that capture and repurpose waste heat create a balanced ecosystem where high-density computing coexists with environmental stewardship. Closed-loop and lake-water cooling slashes potable water withdrawals, renewable overcapacity paired with battery storage enables genuine demand-response, and the integration of data-center waste heat into local heating systems turns a liability into a community asset.

| Challenge | Nordic Feature | Benefit |
| --- | --- | --- |
| Water Consumption | Lake-water & closed-loop cooling | Reduces potable water use |
| Grid Instability | Renewable overcapacity & battery banks | Enables demand-response & peak shaving |
| Emission-Free Power | 100 % hydro/wind mix | Virtually zero operational carbon |
| Waste-Heat Utilization | District-heating integration | Offsets community heating demand |

Table 3 – Trifecta Challenges vs. Nordic Solutions

Water limits, grid flexibility, and clean-energy sourcing now drive site selection, cost structures, and risk profiles. In Section 5, we’ll explore how these sustainability imperatives reshape financing—from securitization vehicles fueling data-center bonds to emerging debates on ROI in AI-heavy facilities—and highlight the financial strategies that align growth with environmental resilience.

Section 5 – Economics & Investment: Market Forces and Financial Risks

Data centers have become the cornerstone of digital‐infrastructure securitization, anchoring a $79 billion market in which they account for 61 percent of asset‐backed securities. Fiber networks and cell towers split the remainder at 20 percent and 18 percent, respectively. Landmark financings—Meta’s $29 billion deal and Vantage’s $22 billion loan—underscore how massive AI and cloud‐service expansions are driving lenders and banks to underwrite ever‐larger transactions.

| Asset Class | Market Share |
| --- | --- |
| Data Centers | 61 % |
| Fiber Infrastructure | 20 % |
| Cell Towers | 18 % |

Table 1 – Digital Infrastructure Securitization Market Composition

| Transaction | Size |
| --- | --- |
| Meta Securitization | $29 B |
| Vantage Data Centers Loan | $22 B |

Table 2 – Landmark Data-Center Financings

Investor confidence in this sector has been bolstered by robust cloud-revenue growth at Microsoft, Alphabet, Amazon, and Meta. Their continued capital spending pledges have tightened risk premiums on data-center ABS dramatically over the past two years. Yet Bank of America analysts warn that most spread compression has run its course, even as data-center tranches remain relatively attractive compared to other ABS categories.

Underneath this securitization boom lies a stark imbalance: the annual depreciation cost of newly built AI-optimized data centers is estimated at $40 billion, while the revenue they generate tops out at $15–20 billion. The estimate cited here puts break-even revenue at nearly $480 billion in a single year, roughly 24 to 32 times today's level and equivalent to about 3.7 billion people each paying around $130 a year, on the order of a Netflix subscription, for AI services.

| Metric | Value |
| --- | --- |
| Annual Depreciation Cost | $40 billion |
| Projected Annual Revenue | $15–20 billion |
| Required Break-Even Revenue | ≈$480 billion |
| Implied Global Subscriber Base | ~3.7 billion people |

Table 3 – AI Data Center Economics at a Glance
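A quick check of the figures in Table 3 shows what they imply. The sketch below uses only the table's numbers; the variable names are illustrative, and it makes no assumption about margins or return targets, which the source does not spell out.

```python
# Figures from Table 3 (USD and people).
depreciation = 40e9
revenue_low, revenue_high = 15e9, 20e9
required_revenue = 480e9
subscriber_base = 3.7e9

# How far current revenue falls short of depreciation alone.
gap_low = depreciation - revenue_high
gap_high = depreciation - revenue_low

# Multiple of current revenue needed to reach the break-even figure.
multiple_low = required_revenue / revenue_high
multiple_high = required_revenue / revenue_low

# Annual payment per person implied by the subscriber base.
per_person = required_revenue / subscriber_base

print(f"Depreciation gap: ${gap_low / 1e9:.0f}-{gap_high / 1e9:.0f} billion per year")
print(f"Required revenue multiple: {multiple_low:.0f}x to {multiple_high:.0f}x")
print(f"Implied payment: ${per_person:.0f} per person per year")
```

The table's figures imply a revenue multiple of 24 to 32 and a payment of about $130 per person per year across 3.7 billion people.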

This math points to a precarious bubble. Harris Kupperman of Praetorian Capital calls it “massive capital misallocation,” warning there simply isn’t enough revenue in the world to justify current build-out rates. Texas’s private‐project cost forecasts jumping from $130 million to $1 billion in two years illustrate how swiftly and unpredictably capex can escalate.

Unless AI-driven services discover new, lucrative revenue streams—or costs of compute and power plummet through breakthrough technologies—investors and lenders face looming write-downs. The next phase of the market will test whether data centers can evolve from securitized “safe assets” into genuinely profitable ventures or become stranded “white elephants” in portfolios that bet too heavily on limitless AI growth.

Section 6 – Synthesis & Future Directions

6.1 Recap of Key Insights

- Foundations: DPUs, Hyperscale & Colocation

Data Processing Units now offload networking, storage, and security from CPUs, reshaping server architectures. Hyperscale campuses have grown to 44 % of global capacity (projected to 61 % by 2030), while colocation hubs supply flexible capacity in top metros and fast-growing tier-2/3 markets.

- Cooling & Efficiency

Traditional air cooling caps out near 40–50 kW/rack. Liquid-cooling technologies (direct-to-chip, full-tank immersion, and modular CDUs) unlock densities above 100 kW with PUEs as low as 1.07. AI-driven tuning and waste-heat recovery further squeeze efficiency gains.

- Regional Case Studies

Switzerland leverages neutrality and Alpine cooling but strains water, power, and land. Finland’s Kouvola project spotlights sovereignty risks tied to foreign investment and chip-export loopholes. The UK balances nearly 100 new builds against grid and water constraints, often resorting to on-site gas. Meta’s Hyperion in Louisiana exemplifies the AI-power surge driving new fossil-fuel plants despite renewable pledges.

- Sustainability Stress Points

Europe faces acute water scarcity, with AI cooling alone demanding 4.2–6.6 billion m³/year. The “flexible customer” grid model proposes interruptible contracts in exchange for accelerated connections. The Nordic region offers a holistic solution: 100 % renewables, lake-water cooling, storage, and district-heating integration.

- Economics & Investment

Data centers anchor a $79 billion securitization market, but underlying assets depreciate at $40 billion/year against $15–20 billion in revenue. Without new revenue streams or cost breakthroughs, investors risk a valuation reckoning.

6.2 Emerging Themes

- Edge Computing Fabric

Micro-data centers and prefabricated pods extend hyperscale performance to the network edge, reducing latency and core congestion.

- Flexible Grid Integration

Interruptible power contracts and demand-response programs transform data centers into adaptive grid partners rather than unlimited load drivers.

- Next-Generation Cooling

Self-contained server-level liquid cooling and immersion systems promise PUEs < 1.1, while modular CDUs enable phased rollouts alongside legacy infrastructure.

- Modular & Scalable Designs

Containerized data halls, plug-and-play power and cooling modules, and software-defined orchestration layers support rapid, site-agnostic deployments.

6.3 Open Questions

- Regulation Evolution

Will policymakers mandate flexible power contracts, water-use limits, or waste-heat reuse? How quickly can new frameworks adapt to technology advances?

- AI-Driven Infrastructure Optimization

Can AI autonomously orchestrate compute placement, cooling loads, and energy sourcing in real time, closing the loop on its own infrastructure footprint?

- Role of Emerging Markets

How will second- and third-tier cities in APAC, Africa, and Latin America reshape capacity geography? Can they offer green power, relaxed permitting, and new talent pools without repeating past unsustainable models?

- Investment Models & Risk

Will financiers develop revenue-based leasing, use-based pricing, or resilience-linked bonds that align long-term returns with sustainability metrics?

These questions frame the frontier of data-center evolution, where technical innovation, environmental stewardship, and economic viability must converge to power the next wave of AI and digital services.
